How is data modeling in Python server applications done? Explore with us our open-source SQLAlchemy project to find out.

In our previous post, we explored the structure, strengths, and main components of a server/client architecture. In this design, user interaction and business logic are handled by two separate programs, which communicate via HTTP requests to exchange data.

If you decided that this architecture is what your project needs, you’re in the right place to learn more: we will explore the design of one such application to understand how to build it.

sem

The program we will examine is sem, written by the author of this post; its source code is currently shared on github. The goal of this software is to offer a straightforward, simple manager for domestic expenses, allowing recording and querying of the relative data.

I chose for sem a server/client design mainly to allow future extensibility with various possible interfaces (command-line, web, graphical…), which I would implement separately. Following the ideas discussed in our last post, I chose the technologies to use to implement sem based on my design goals and requirements.

- I chose Python as programming language. Performance was not an issue here, since

semwas meant for small-scale use. The faster Python development process (as opposed to compiled languages) was therefore the leading consideration. - SQLAlchemy is a mature Python ORM library, which simplifies many aspects of working with databases. I chose to use this framework, as opposed to direct low-level database access, for simplicity and interoperability with the language.

- I decided to use PostgreSQL, a server-based database system, as my database backend. Its versatility combines with the simplicity of use and deployment given by SQLAlchemy, essentially removing possible downsides (slightly more complex connection syntax, etc.).

- I preferred FastAPI as API framework due to its simplicity of use and integration with other Python libraries (

pydanticfor type validation, to name one).

In this post we will start exploring the implementation of sem, beginning with the first steps: how to generally structure the program, and how to model data using Python and SQLAlchemy.

Application Layers

The modules of sem are stored in a modules/ directory in the project root. These are the files contained within, which we will explore one by one as these posts go along:

├── modules

├── api.py

├── cli.py

├── crud_handler.py

├── main.py

├── models.py

├── schemas.py

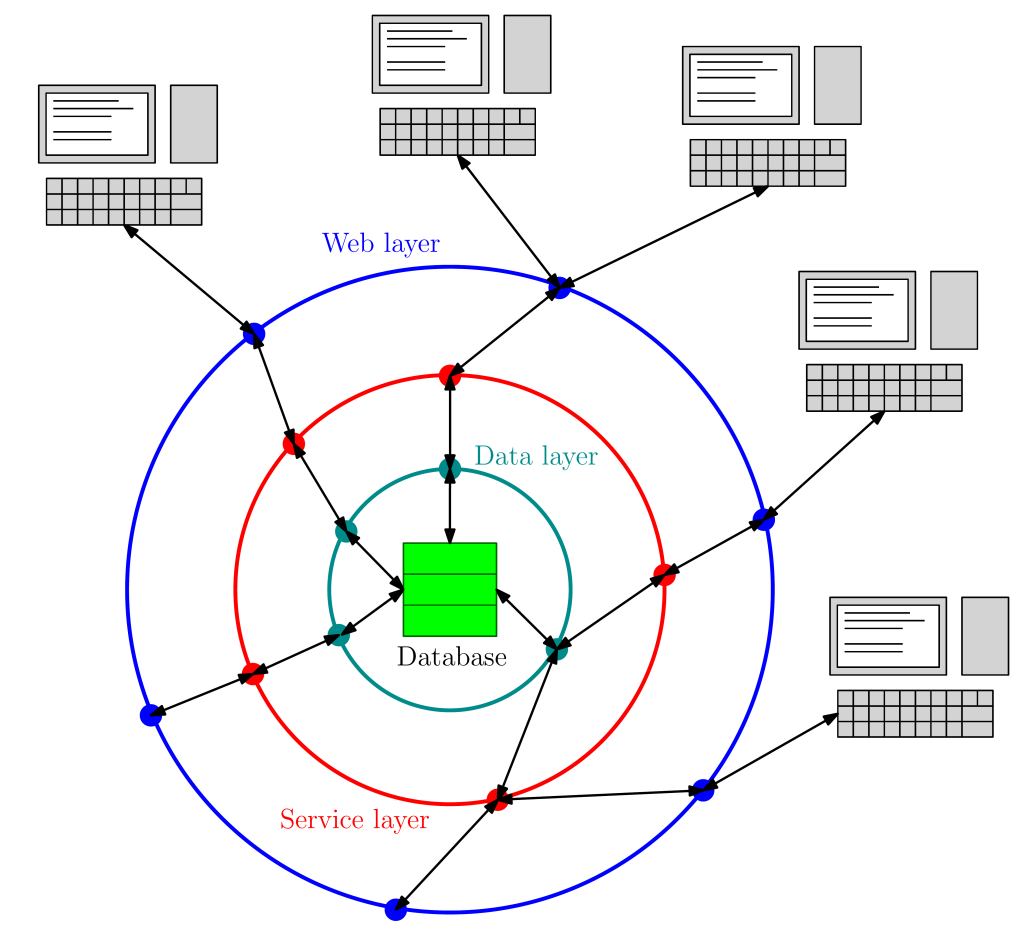

└── session.pyAll of these modules (except cli.py which is devoted to a simplified command-line interface, and main.py which launches the server) are linked to one of three layers of functionality which any server application must have, in one form or another:

- The data layer concerns direct manipulation of the database.

models.pyis strongly linked to this part of the program, since it defines the data model which the database will use. - The service layer is an intermediary between the data layer and the API.

crud_handler.pyis the main module here, since it defines a class (CRUDHandler) which wraps the database for API usage. This object uses the functions insession.pyto actually connect to the database, whileschemas.pycontains the definitions of the types used by the methods ofCRUDHandler. - The web layer contains the API and is the one that users and other services pass through to interact with the server. API definitions are contained in

api.py.

While not strictly necessary (for instance, one could merge the service layer with one of the others) structuring the application in this way ensures flexibility and make testing easier.

The latter aspect is crucial: while developing each of the layers, I made sure to immediately write testing modules and functions, to make sure everything worked as intended. We will move our discussion of testing to a future post, to keep the discussion simpler at this stage.

Now that the general structure of the application is clear, we need to understand the general model of our data.

Data Model

Choosing the right model for your data is key to simplify their management and to obtain good performance from your program.

In some cases, the best solution is splitting data in different tables which will be queried together via joins, to avoid redundancy and increase performance. In my case, database design was simpler, and one table was enough to efficiently deal with my data needs.

Like in our example in the previous post, I classified my data entities (expenses) using the following fields:

- A unique ID field, which will distinguish each expense (playing the role of primary key of the table). Primary keys are not strictly necessary, but it is always a good idea to have one, usually chosen to be integer.

- A date field, the day in which the expense was made. SQL databases have builtin date types, which SQLAlchemy will interface with the Python

datetime.datetype. - A type field, which will classify the expense. This will be a string.

- An amount field, representing the amount of money spent as a floating-point entry.

- A description field, a string field which will contain a longer description of the expense.

Besides these, I decided along the road to add a category field, a second string field for higher-level categorization. This may be useful in case, e.g., sem is used to classify the expenses of multiple members of a family, to record who performed them. In contrast, I use type for lower-level classification (for instance, “food” or “leisure”).

Now that the model is defined, we can begin coding, creating the Python types which will encode our data structure.

Data Modeling in Python Server Applications

Now we know what data model we should follow: we still need to understand how the actual data modeling is done in Python server applications like sem.

We will answer this question exploring the models.py module, which contains the data model for our expenses in a format which SQLAlchemy can use to set up a database.

The module begins with a module docstring, where the contents of the module are specified (divided by type, such as Classes or Functions). This is useful for the programmer, to have a quick reference of file contents, and will be used by the automatic documentation system. In the following, we will also see docstrings for functions and classes (except in the API, where the documentation system is different).

# modules/models.py

1 """Database mapped class.

2

3 Classes

4 -----------------------

5 Base

6 Inherits DeclarativeBase, base class for mapped objects.

7 Expense

8 Class modeling database entry.

9 """This is followed by the main section of the file, where the Python class encoding the model of the data is defined.

SQLAlchemy offers several possible interfaces to define and manage models and data. sem uses the declarative style, available in newer SQLAlchemy versions (2.0 or more recent), which allows deeper integration with Python.

In the declarative paradigm, models are defined as derived classes of the DeclarativeBase type (the usual idiom is to use an intermediate Base class, from which the actual model class will inherit).

# modules/models.py

34 from datetime import date

35

36 from sqlalchemy.orm import DeclarativeBase

37 from sqlalchemy.orm import Mapped

38 from sqlalchemy.orm import mapped_column

39

40

41 class Base(DeclarativeBase):

42 """Inherits DeclarativeBase, base class for mapped objects."""The newly defined Base

__tablename__is a string, the name of the table which will store the information of the objects of the derived type.metadatais an attribute of typeMetaData, which will be used at a later stage to create the schema (i.e., the tables required to store our data) in the database.

The model is then defined similarly to a Python dataclass, by specifying its fields and providing type annotations. Instead of directly using standard Python types, however, annotations in SQLAlchemy models use the Mapped[<type>] construct, which gives the required information to the framework.

# modules/models.py

45 class Expense(Base):

46 """Expense class.

47

48 Attributes

49 -----------------------

50 id : int

51 ID of the expense, primary key field.

52 date : date

53 Date of the expense.

54 type : str

55 Low-level group of the expense.

56 category : str

57 High-level group of the expense. Default is "".

58 amount : float

59 Amount of the expense.

60 description : str

61 Description of the expense.

62 """

63

64 __tablename__ = "expenses"

65

66 id: Mapped[int] = mapped_column(primary_key=True)

67 date: Mapped[date]

68 type: Mapped[str]

69 category: Mapped[str] = mapped_column(default="")

70 amount: Mapped[float]

71 description: Mapped[str]This code will instruct SQLAlchemy that the Expense type will be used in the program to model database entries, and that it should create a single table named expenses with the requested fields.

SQLAlchemy also allows to handle more complex situations, in which two or more data types are stored in separate tables, to be joined on request.

The mapped_column() function is used to specify field information which goes beyond the simple data type. In my code,

idhas been set as the primary key of the table using theprimary_keykeyword argument. This field will be indexed on and auto-inserted by the database.categoryhas been given the""default value (used if the field is left unspecified in an object of typeExpense) via thedefaultkeyword argument.

Before moving to the rest of the program, I also added a string representation method for the model. This will be called when a string encoding an object of type Expense is requested:

# modules/models.py

73 def __repr__(self) -> str:

74 """Dunder representation method."""

75 return f"""Expense(

76 id={self.id!r},

77 date={self.date!r},

78 type={self.type!r},

79 category={self.category!r},

80 amount={self.amount!r},

81 description={self.description!r}

82 )"""We get the string representation of each field (via the !r operator), which is then interpolated in the main result string.

What’s Next?

We have chosen the tools, understood our data needs, and seen how to perform data modeling in Python server applications.

In the next installment of this series, we will see how to write an object (CRUDHandler) which will actually create our database and perform operations on it.

Author: Adriano Angelone

After obtaining his master in Physics at University of Pisa in 2013, he received his Ph. D. in Physics at Strasbourg University in 2017. He worked as a post-doctoral researcher at Strasbourg University, SISSA (Trieste) and Sorbonne University (Paris), before joining eXact-lab as Scientific Software Developer in 2023.

In eXact-lab, he works on the optimization of computational codes, and on the development of data engineering software.