
In our previous post, we saw how to run a server application, build its documentation, and set up its tests. Today, we will see how to deploy our server application using Docker containers, and we will explore some ideas on how to write a simple command-line interface (CLI).

Introduction to Containers

Distributing and running applications across multiple platforms and operating systems can be rather cumbersome. Indeed, packages built for one Linux distribution may require repackaging to be used on another distribution or on macOS (let alone on Windows, which has a rather different set of build tools).

This can in principle be solved by shipping the source code and letting users build and run the program on their own systems; however, this places the burden of deployment on the user. Containerization offers an alternative, allowing software to be built and set up in a straightforward way across a wide variety of platforms.

The basic idea behind containerization is to create a layer of emulation for an environment of choice, in which the program will run. This is similar in principle to using a virtual machine, which emulates one operating system while running inside another, with a few key differences.

Firstly, containerization generally offers much better performance, because the emulation layer can be thinner, i.e., it emulates less software (on Linux, for instance, containers share the kernel of the host rather than running their own). Near-native performance is therefore possible, making containerization an attractive option.

Furthermore, since every container is usually meant to run only one process, the container setup process is usually simpler, avoiding cross-compatibility issues. For instance, as we will see, Python virtual environments are mostly not required, and one can simply install Python packages at the system level.

Finally, containerized services can be networked: multiple containers, each executing a separate process, can be set up to communicate and interact via HTTP requests. This makes it straightforward to deploy microservice architectures, in which a program is broken up into smaller, independent units communicating over a network (at the cost of more complexity, but with a possible gain in flexibility). These networks (mostly) isolate the containers from the host system, but still allow them to communicate with each other.

Server Container

To simplify the deployment of sem, I set up a container-based build process, complementing its poetry-based local deployment. I implemented it using the well-known docker system, as well as its docker-compose utility to manage the multiple containers required (see below). These programs build containers from configuration files and run them in a container engine.

As is common for database applications, I prepared a container for the server, which will communicate with a separate container specifically dedicated to the database service. This section will explore the way in which the former is set up.

A container is the combination of a process to run and an image, i.e., an environment in which the software and setup required by the process are installed. Images are usually built by adding layers (configuration/installation steps) on top of already existing images. The latter usually provide a particular program, programming language interpreter, or operating system.

Docker creates custom images and containers from Dockerfiles, configuration files which contain the build instructions, layer by layer. In sem, the database container only requires minimal setup, and does not warrant a dedicated Dockerfile; the server container is instead built from the instructions placed in docker/Dockerfile, which we will explore step by step below.

    # docker/Dockerfile
     1  # syntax=docker/dockerfile:1
     2
     3  # selecting python image
     4  FROM python:3.11-slim
     5
     6  # creating a workdir
     7  WORKDIR /app
     8
     9  # installing system packages, required for psycopg
    10  RUN apt-get update
    11  RUN apt-get -y install gcc postgresql postgresql-contrib libpq-dev

The first step is choosing a base image upon which the server image will be built, using the FROM directive (by default, images are downloaded from the Docker Hub online repository). Since the server program is written in Python, the natural choice is a Python image, which comes with a pre-installed Python interpreter and the pip package manager. In many cases, multiple versions of each image are available, so that the desired one can be chosen.

The system provided by the Python image used here is based on Debian Linux (with minimal additional software, since I chose the slim image), with the corresponding filesystem. The WORKDIR directive creates a directory which will serve as the base for the rest of the instructions to be executed (building, copying external files, etc.).

Due to our choice of the psycopg driver to access the database, we need to install some system (not Python) packages, which the driver will employ. This is done by executing system-specific commands in the internal shell of the image, using the RUN directive. Since the container is based on a Debian system, apt-get handles package installation (note the -y option, which automatically answers yes to confirmation prompts, and is necessary since the installation is non-interactive).

Each of the steps above is one of the image setup layers: docker automatically caches each layer during the build, allowing subsequent builds to reuse the cached layers up to the first point of change, and to rebuild only from there on. The order of the layers can therefore speed up builds during development, if frequently changed layers are placed towards the end of the build whenever possible.

    # docker/Dockerfile
    13  # preparing separately requirement file,
    14  # installation will be performed iff requirements change
    15  COPY docker/requirements.txt .
    16
    17  # installing required packages
    18  RUN pip install -r requirements.txt

The COPY directive copies files from the host system to the container filesystem (here, to the working directory). The copied file (docker/requirements.txt) contains the dependency information of the project, in a format which the pip installer can understand, and can be generated by poetry with the command

    $ poetry run pip freeze | grep -v '^-e' > docker/requirements.txt

Here, grep removes a line dedicated to installing the project package itself. Calling pip install -r with the requirements file as argument will install the project dependencies at a system-wide level on the container.
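The filtering step performed by grep in the command above can be sketched in Python as follows. This is only an illustration: the function name `strip_editable` and the sample package list (including its version numbers and URL) are hypothetical, and are not part of sem.

```python
def strip_editable(freeze_output: str) -> str:
    """Drop editable-install lines (those starting with '-e'),
    mirroring the effect of `grep -v '^-e'`."""
    kept = [
        line
        for line in freeze_output.splitlines()
        if not line.startswith("-e")
    ]
    return "\n".join(kept) + "\n"


# Hypothetical `pip freeze` output, for illustration only
sample = (
    "fastapi==0.103.0\n"
    "-e git+https://example.com/user/sem.git@abc123#egg=sem\n"
    "requests==2.31.0\n"
)

print(strip_editable(sample), end="")
```

Only the lines describing regular (non-editable) packages survive, which is what pip needs to reproduce the environment inside the container.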

Note how pip, rather than poetry, is used to manage project dependencies here. poetry is a tool built on top of pip, which manages dependencies and builds virtual environments to run software without dependency issues with other programs. When containerizing, the latter aspect usually becomes unnecessary, since mostly only one program will be run in the container. Containers therefore usually use pip to install Python packages at the system (container) level, where programs are also run.

    # docker/Dockerfile
    20  # copy all other local content host -> container
    21  COPY . .
    22
    23  # launch command
    24  CMD "docker/run.sh"

At line 21, I copy the whole project directory to the working directory: this will allow the container to use the project files. Note how I copied docker/requirements.txt separately: if I had copied the whole project at line 15, changes in any project file would result in docker performing the installation process at line 18 again (and all the following layers). This highlights how layer planning optimizes image building during development.
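The effect of layer ordering on rebuilds can be illustrated with a deliberately simplified model, in which invalidating one layer forces a rebuild of that layer and of all the following ones. The function `layers_to_rebuild` is a hypothetical helper, and the model ignores how docker actually detects changes (checksums of instructions and copied files).

```python
def layers_to_rebuild(layers: list[str], first_changed: int) -> list[str]:
    """Return the layers rebuilt when the layer at index `first_changed`
    is invalidated: that layer and every layer after it."""
    return layers[first_changed:]


# Instructions of the Dockerfile discussed above, in build order
dockerfile = [
    "FROM python:3.11-slim",
    "WORKDIR /app",
    "RUN apt-get update",
    "RUN apt-get -y install gcc postgresql postgresql-contrib libpq-dev",
    "COPY docker/requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY . .",
    'CMD "docker/run.sh"',
]

# A change in a source file invalidates only the last two layers...
source_change = layers_to_rebuild(dockerfile, dockerfile.index("COPY . ."))
print(source_change)

# ...while a change in the requirements also repeats `pip install`
requirements_change = layers_to_rebuild(
    dockerfile, dockerfile.index("COPY docker/requirements.txt .")
)
print(requirements_change)
```

In this model, editing a source file leaves the (slow) dependency installation cached, which is the reason for copying the requirements file in a separate, earlier layer.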

At the final line, CMD is used to specify the command which will be executed when running the container based on the image. In the case of sem, this is the executable docker/run.sh bash script:

    #!/bin/bash
    # docker/run.sh

    if [[ $SEM_LAUNCH == "docs" ]]
    then
        mkdocs build
        mkdocs serve
    else
        python -m modules.main
    fi

Based on the value of the SEM_LAUNCH variable, the container will serve the documentation, or run a server instance which will remain active and listen for HTTP requests.
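For readers more familiar with Python than with bash, the branching logic of the script can be sketched as a function returning the commands to run. The name `launch_commands` is hypothetical; the commands themselves are those of docker/run.sh.

```python
import os
from collections.abc import Mapping


def launch_commands(environ: Mapping[str, str]) -> list[list[str]]:
    """Return the commands to execute, mirroring the branches of run.sh."""
    if environ.get("SEM_LAUNCH") == "docs":
        # Documentation mode: build the pages, then serve them
        return [["mkdocs", "build"], ["mkdocs", "serve"]]
    # Default mode (variable unset or with any other value): run the server
    return [["python", "-m", "modules.main"]]


print(launch_commands(os.environ))
```

Note that any value of SEM_LAUNCH other than "docs" (including an unset variable) selects the server, exactly as in the else branch of the script.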

Container Composition

As we mentioned before, the server container will interact with a dedicated database container. The containers themselves, and the network they will interact over, are set up in sem using the docker-compose utility. Its configuration is contained in the docker-compose.yml file in the project root directory, which we will explore step by step.

    # docker-compose.yml
     1  version: "3"
     2
     3  services:
     4    db:
     5      container_name: sem-db
     6      image: postgres:15.4-alpine
     7      restart: always

The services section contains a list of the services to be set up. We start with the db service, whose container will be named sem-db and will be based on a PostgreSQL image. Thanks to the restart: always policy, docker will restart the container whenever it stops (unless it is stopped manually).

When run as a container, the db service will launch an instance of the database service, which will keep listening for connections. This instance will not conflict with any instance running on the host system, even though it listens on the same port, since in our setup it is only accessible from within the container network.

    # docker-compose.yml
     8      volumes:
     9        - ./sem-db-data:/var/lib/postgresql/data
    10      environment:
    11        POSTGRES_USER: sem
    12        POSTGRES_PASSWORD: sem
    13        # required for the check below - otherwise postgresql
    14        # will use undefined 'root' user and raise errors
    15        PGUSER: sem

At line 8, I create a volume, i.e., a shared host-container directory used to persistently store data between container executions. Here, I am linking the sem-db-data/ folder in the project root directory with the /var/lib/postgresql/data/ directory (the location where PostgreSQL stores database data) in the container filesystem. In this way, sem-db-data/ will persistently store database data, so that the container can be stopped and restarted without data loss.

At line 10, I introduce some environment variables, which will be declared in the database container environment. These are default PostgreSQL variables containing the username and password used to access the database, as well as the user information for the healthcheck (see below).

    # docker-compose.yml
    16      healthcheck:
    17        # postgresql starts up, stops, and then restarts
    18        # => errors if the server connects before the stop
    19        # this checks if the system is ready
    20        test: [ "CMD-SHELL", "pg_isready" ]
    21        interval: 5s
    22        timeout: 5s
    23        retries: 5

The healthcheck directive periodically runs a test command inside a running container, to verify that the launch was successful: here, I used it to solve an issue with the PostgreSQL container.

Specifically, the PostgreSQL service starts, then stops and restarts shortly afterwards. If the server service (described below) attempts to connect to the database while the latter is inactive, the launch will fail. The healthcheck set up here runs the pg_isready command, which checks whether the database service is accepting connections, every 5 seconds (with a 5-second timeout for each attempt); after 5 consecutive failures, the container is considered unhealthy.

When this is done, and on success, the container will broadcast that its state is healthy. Later, I will instruct the server service to wait for the healthcheck to complete before launching.
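The polling logic behind a healthcheck can be sketched as a retry loop. This is a simplified model rather than docker's implementation: it ignores the per-attempt timeout, and the name `wait_until_healthy` and the simulated check are hypothetical.

```python
import time
from typing import Callable


def wait_until_healthy(
    check: Callable[[], bool],
    interval: float = 5.0,
    retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run `check` up to `retries` times, waiting `interval` seconds
    between attempts; return True as soon as one attempt succeeds."""
    for attempt in range(retries):
        if check():
            return True
        # No need to wait after the last failed attempt
        if attempt < retries - 1:
            sleep(interval)
    return False


# Simulated service which only becomes ready at the third check;
# the sleep function is replaced to avoid actually waiting
answers = iter([False, False, True])
print(wait_until_healthy(lambda: next(answers), sleep=lambda seconds: None))
```

Passing the sleep function as an argument is a design choice made here to keep the sketch testable without real delays.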

    # docker-compose.yml
    25    server:
    26      container_name: sem-server
    27      depends_on:
    28        db:
    29          condition: service_healthy
    30      image: sem-server

This section of the docker-compose file describes the server service, which will be run in a container named sem-server, based on an image of the same name. I specified that this service should wait until the db service reports a healthy state before starting, to avoid connecting to the database service before it is ready.
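The startup order implied by depends_on can be sketched as a topological sort of the dependency graph, here using the graphlib module of the Python standard library (Python 3.9 or later). The helper `start_order` is hypothetical, and the sketch only covers ordering: it does not model waiting for the healthy state.

```python
from graphlib import TopologicalSorter


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Order services so that each one comes after its dependencies.

    `depends_on` maps each service to the services it depends on.
    A circular dependency raises graphlib.CycleError (a ValueError).
    """
    return list(TopologicalSorter(depends_on).static_order())


# Dependencies of the services defined in docker-compose.yml
services = {"server": ["db"], "db": []}
print(start_order(services))
```

With the two services of sem, the database comes first and the server second.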

    # docker-compose.yml
    31      build:
    32        context: .
    33        dockerfile: docker/Dockerfile
    34      ports:
    35        - 8000:8000
    36        - 8001:8001
    37      environment:
    38        - SEM_DOCKER=1
    39        - SEM_LAUNCH

The build section contains information on how to build the image associated with the service. Here, I specified that the Dockerfile docker/Dockerfile should be used, with the current directory as the build context.

The container will expose the ports 8000 and 8001, allowing the content offered on these ports by the service (namely, the server and documentation pages) to be reached by the host system as well, at localhost:8000 and localhost:8001.
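Once the containers are running, a quick way to verify from the host that the ports are reachable is to attempt a TCP connection. The sketch below uses only the standard library; the function name `is_port_open` is hypothetical.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not reachable
        return False


# Ports published by the server container in docker-compose.yml
for port in (8000, 8001):
    print(port, is_port_open("localhost", port))
```

A successful connection only shows that something is listening on the port, not that it is answering HTTP requests correctly.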

Finally, the environment section specifies environment variables for the container environment. Values can be assigned in one of two ways:

- The variables can be created anew and given values, such as for SEM_DOCKER above, which I set to 1. The goal of this variable is to inform the server that docker-specific configurations should be sourced (see below).
- Alternatively, environment variables can be forwarded from the host environment, like SEM_LAUNCH here. This variable, as seen in docker/run.sh, specifies whether the server program or the documentation server should be launched, and is selected when launching the container ensemble at the host level (see below).
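The two assignment modes can be summarized with a small sketch, which resolves a list of entries in the same format used in the environment section. The function `resolve_environment` is hypothetical, and only models the behavior described above.

```python
def resolve_environment(
    entries: list[str], host_env: dict[str, str]
) -> dict[str, str]:
    """Resolve a docker-compose style `environment` list.

    "KEY=VALUE" entries are set explicitly; bare "KEY" entries take
    their value from the host environment, and are left unset in the
    container if the host does not define them.
    """
    resolved = {}
    for entry in entries:
        key, separator, value = entry.partition("=")
        if separator:
            resolved[key] = value
        elif key in host_env:
            resolved[key] = host_env[key]
    return resolved


entries = ["SEM_DOCKER=1", "SEM_LAUNCH"]
print(resolve_environment(entries, {"SEM_LAUNCH": "docs"}))
print(resolve_environment(entries, {}))
```

In the second call the host does not define SEM_LAUNCH, so only SEM_DOCKER reaches the container, and run.sh falls back to launching the server.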

Networking and Execution

Docker-compose automatically sets up a network connecting the containers described in its configuration file. It also provides a Domain Name System (DNS) service, which allows containers to be reached using their names as hostnames, rather than via their IP addresses in the container network. This may require some changes in networking setups (by, e.g., replacing localhost with the container name).

Performing these changes was the final part of my containerization effort, and is done in the connection module (modules/session.py). I modified the earlier version of the file as follows:

    # modules/session.py
    import os

    ...

    def init_session(database: str) -> Session:
        ...
        DRIVER = "postgresql+psycopg"

        if os.environ.get("SEM_DOCKER") == "1":
            USER = "sem"
            PASSWORD = "sem"
            HOST = "sem-db"
        else:
            USER = "postgres"
            PASSWORD = ""
            HOST = "localhost"

        PORT = "5432"

        DB = f"{DRIVER}://{USER}:{PASSWORD}@{HOST}:{PORT}/{database}"
        ...

The value of the SEM_DOCKER environment variable is read from os.environ, a dictionary-like object which stores environment variable values as strings. The docker-specific or the generic database specifications are selected based on the value of SEM_DOCKER, which can be set at launch. Note how the container name (sem-db) is used as the host in the database URL when in the containerized environment.
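The selection logic above can be isolated in a pure function, which makes it easy to check the resulting URL without a running database. This is a possible refactoring, not the code of sem: the function name `build_db_url` is hypothetical, as is the database name used in the example.

```python
from collections.abc import Mapping


def build_db_url(database: str, environ: Mapping[str, str]) -> str:
    """Build the database URL, choosing docker-specific or local
    settings based on the SEM_DOCKER environment variable."""
    driver = "postgresql+psycopg"
    port = "5432"

    if environ.get("SEM_DOCKER") == "1":
        # Containerized setup: the host is the database container name
        user, password, host = "sem", "sem", "sem-db"
    else:
        user, password, host = "postgres", "", "localhost"

    return f"{driver}://{user}:{password}@{host}:{port}/{database}"


# "sem" is a hypothetical database name, used for illustration
print(build_db_url("sem", {"SEM_DOCKER": "1"}))
print(build_db_url("sem", {}))
```

Passing the environment as an argument (os.environ in production, a plain dictionary in tests) avoids having to modify the real environment to exercise both branches.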

The container setup discussed here can now be launched. This is done using the docker compose command, shown below for normal server execution and documentation serving, respectively:

    $ docker compose up --build
    $ SEM_LAUNCH="docs" docker compose up --build

These commands will rebuild the images at every call (thanks to the --build option) and launch the container ensemble with the passed command-line options.

The process can be simplified by writing a makefile:

    # makefile
    .PHONY: docker docs

    ...

    docker-run:
    	docker compose up --build

    docker-docs:
    	SEM_LAUNCH="docs" docker compose up --build

    ...

    run:
    	poetry run sem

    test:
    	poetry run python3 -m pytest --ignore=sem-db-data/ -x -s -v .

    requirements:
    	poetry run pip freeze | grep -v '^-e' > docker/requirements.txt

    docs:
    	poetry run mkdocs build
    	poetry run mkdocs serve

The makefile automates most of the steps required to launch the server, both locally and using a container ensemble. For instance, the command

    $ make docker-run

(possibly requiring administrative rights) will launch the container ensemble set up as above.

Command-Line Interface

Good client design involves careful consideration to pick the best options and frameworks based on the needs of the user.

A very popular option is the use of web-based graphical interfaces, web pages which translate user input (collected using graphical controls) into HTTP requests to a server. Here we will explore a much simpler but still relatively versatile option, in the form of command-line interfaces.

The standard framework to build this type of interface in Python is argparse, a standard library module which parses command-line arguments, options, and sub-commands. Commands can then be composed in a UNIX shell, with default values, some degree of validation, and more features. This is especially useful when shell features (e.g., wildcard expansion, interaction with other shell tools) combine well with the program (e.g., if the program accepts multiple filename arguments).

In sem, I implemented a simpler (but in some respects more versatile) command-line interface, which works in an interactive Python shell. Specifically, the module modules/cli.py defines a few Python functions which receive user input and use it to perform HTTP requests to the server. The module begins with some general setup:
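To give an idea of the argparse approach, here is a minimal sketch of a parser with an add sub-command. It is not part of sem: the program name `sem-cli`, the choice of positional versus optional arguments, and the float type of the amount are all assumptions made for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with a single `add` sub-command."""
    parser = argparse.ArgumentParser(
        prog="sem-cli", description="Hypothetical expense manager CLI"
    )
    subparsers = parser.add_subparsers(dest="command", required=True)

    add = subparsers.add_parser("add", help="add an expense")
    add.add_argument("date", help="expense date (YYYY-MM-DD)")
    add.add_argument("type", help="expense type")
    add.add_argument("amount", type=float, help="expense amount")
    add.add_argument("--category", default="", help="expense category")
    add.add_argument("--description", default="", help="expense description")
    return parser


# Parse an explicit argument list instead of sys.argv, for illustration
args = build_parser().parse_args(
    ["add", "2023-12-04", "food", "12.5", "--category", "lunch"]
)
print(args)
```

The type=float argument gives a first level of validation: a non-numeric amount makes argparse exit with an error message before any request is sent.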

    # modules/cli.py
    import os

    from fastapi.encoders import jsonable_encoder

    import requests

    # `rich` works for tables and general printing
    from rich.console import Console

    ...

    # `colorama` works for input() in docker
    from colorama import Fore
    from colorama import Style

    from modules.schemas import ExpenseAdd

    ...

    console = Console()

    ...

    # Emphasis formatting - colorama
    EM = Fore.GREEN + Style.BRIGHT
    NEM = Style.RESET_ALL

    if os.environ.get("SEM_DOCKER") == "1":
        server = "http://sem-server:8000"
    else:
        server = "http://127.0.0.1:8000"

colorama and rich are two libraries which allow complex output formatting (colored text, tables…). rich is a more complete framework, which would usually be sufficient on its own. In this instance, however, I used both, since rich behaved incorrectly when launching the command-line interface in the containerized setup.

After defining an object of type rich.console.Console, which will perform the printing, and setting the emphasis and reset sequences, I defined the address of the server which should receive requests in the server variable (note the distinction between the containerized and non-containerized case).

We will examine here the add() function, which allows the user to specify the data of an expense to add to the database.

    # modules/cli.py
    def add():
        """Add an Expense, querying the user for data."""
        date = input(f"{EM}Date{NEM} (YYYY-MM-DD) :: ")
        typ = input(f"{EM}Type{NEM} :: ")
        category = input(f"{EM}Category{NEM} (optional) :: ")
        amount = input(f"{EM}Amount{NEM} :: ")
        description = input(f"{EM}Description{NEM} :: ")

        response = requests.post(
            server + "/add",
            json=jsonable_encoder(
                ExpenseAdd(
                    date=date,
                    type=typ,
                    category=category,
                    amount=amount,
                    description=description,
                )
            ),
        )

        console.print(response.status_code)
        console.print(response.json())

After receiving the data from the user via the input() Python function, the function sends a request to the server at the /add URL. This is done via the post() function of the requests module, which offers methods to send HTTP requests to URLs of choice (effectively implementing client-like functionality).

The json argument of the function is used to pass a request body (here obtained by serializing an ExpenseAdd object, built with user-specified data). The status code and body of the response to the request, returned by the post() function, are then printed to the screen.
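What passing a JSON body amounts to can be shown with the standard library alone: the data is serialized to JSON and sent in a POST request whose content type is application/json. The sketch below prepares such a request without sending it; it uses urllib instead of requests, and the `ExpenseData` dataclass is a hypothetical stand-in for the ExpenseAdd schema, with assumed field types.

```python
import json
from dataclasses import asdict, dataclass
from urllib.request import Request


@dataclass
class ExpenseData:
    """Hypothetical stand-in for the ExpenseAdd schema."""

    date: str
    type: str
    category: str
    amount: float
    description: str


def build_add_request(server: str, expense: ExpenseData) -> Request:
    """Prepare (without sending) a POST request with a JSON body."""
    body = json.dumps(asdict(expense)).encode("utf-8")
    return Request(
        server + "/add",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_add_request(
    "http://127.0.0.1:8000",
    ExpenseData("2023-12-04", "food", "lunch", 12.5, "sandwich"),
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Actually sending the request (e.g., with urllib.request.urlopen) requires a running server, which is why the sketch stops at building it.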

The rest of the module contains similar functions, which allow the user to send requests to all the endpoints of the API. The module can be launched interactively with

$ poetry run python3 -im modules.cliwhen running locally (note the -i option, which loads the module in an interactive Python shell). When running in a containerized setup, I use

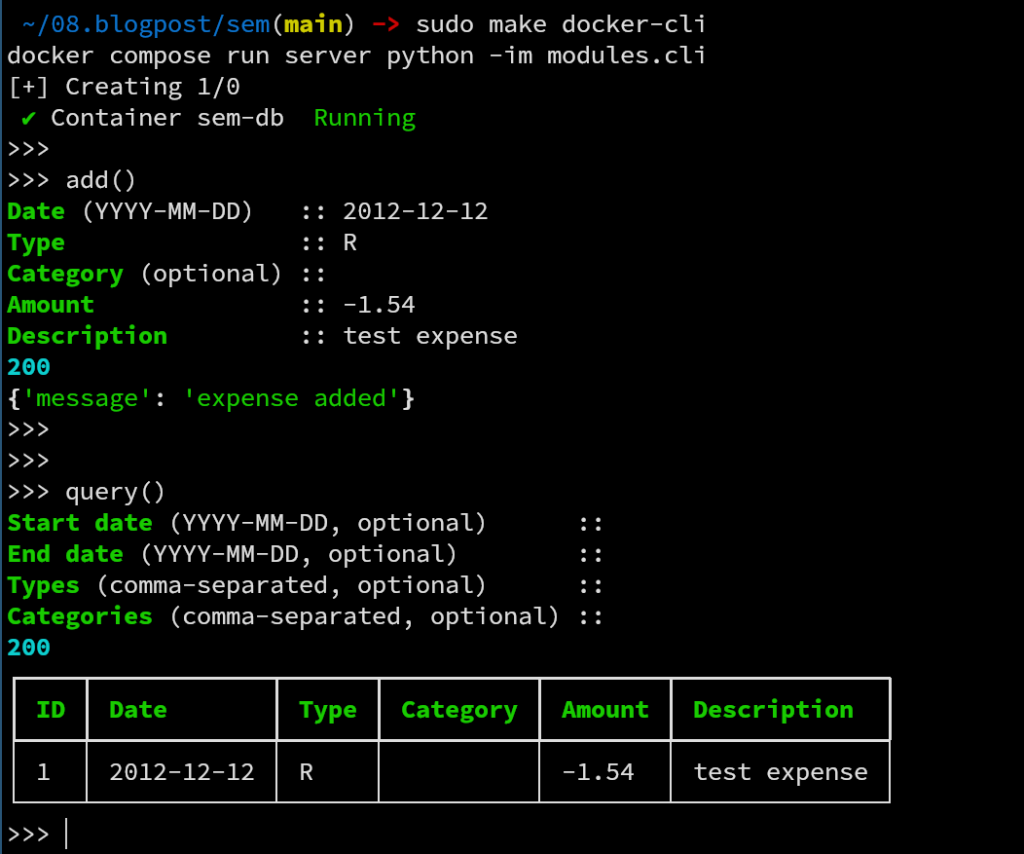

$ docker compose run server python -im modules.cliwhich executes a duplicated instance of the server container, where the final command (normally, launching the server) is overridden to load the CLI module in an interactive shell. This simplifies setup, since a CLI container is not needed.

Both the local- and container-based CLI launch commands are included in the project makefile (as the cli and docker-cli targets, respectively). An example of usage of the CLI may look like

where I call the add() function, to add an expense, calling then the query() function, to list the expenses contained in the database in a formatted table (via the utilities in rich). In the call to query(), no values were passed to the filters, making the system print all the stored expenses.

Author: Adriano Angelone

After obtaining his master's degree in Physics at the University of Pisa in 2013, he received his Ph.D. in Physics at Strasbourg University in 2017. He worked as a post-doctoral researcher at Strasbourg University, SISSA (Trieste) and Sorbonne University (Paris), before joining eXact-lab as a Scientific Software Developer in 2023.

At eXact-lab, he works on the optimization of computational codes, and on the development of data engineering software.