In this post, we will understand how to set up modules and tools to run, document, and test our codebase.

Sources: https://pxhere.com/en/photo/1188160, https://www.publicdomainpictures.net/en/view-image.php?image=424040&picture=rapid-test-corona-test

In our previous post, we saw how to design an API using the FastAPI Python framework. Today, we will see how to run the server, build its documentation, and set up test modules for a Python project.

Dependency Management and Execution

The entrypoint of sem is placed in the modules/main.py module:

    # modules/main.py
    import uvicorn


    __version__ = "1.0.0"


    def main():
        "Execute main entrypoint."
        uvicorn.run("modules.api:app", host="0.0.0.0", reload=True)


    if __name__ == "__main__":
        main()

The uvicorn module is used to launch applications listening for HTTP requests (like my FastAPI app, specified as argument).

- The application will use the specified host location (on the default port, 8000, since we did not give one as argument).

- The address 0.0.0.0 is used for future compatibility with containerization (the default would be 127.0.0.1 or localhost, which also work with my choice of host).

- Setting reload to True ensures that the server will be reloaded if any of its source files are modified.

The server could now be launched by running the command

    $ python -m modules.main

in the system command line; this is however likely to fail, unless all required packages are installed on the system.

To make sure that all dependencies are installed, sem is pre-configured to be set up with poetry, a Python package manager. Specifically, the repository contains a pyproject.toml file, which lists the dependencies required by the project and other relevant information.

    # pyproject.toml
    [tool.poetry]
    name = "sem"
    version = "0.1.0"
    description = "Simple expense manager"
    authors = ["aangelone2 <adriano.angelone.work@gmail.com>"]
    license = "GPL v3"
    readme = "README.md"
    packages = [{include = "modules"}]

    [tool.poetry.dependencies]
    python = "^3.11"
    SQLAlchemy = "^2.0.20"
    SQLAlchemy-Utils = "^0.41.1"
    psycopg = "^3.1.14"
    fastapi = "^0.104.1"
    uvicorn = "^0.24.0"
    rich = "^13.7.0"

    [tool.poetry.group.dev.dependencies]
    pytest = "^7.4.3"
    httpx = "^0.25.1"

    [tool.poetry.group.docs.dependencies]
    mkdocs = "^1.5.3"
    mkdocstrings = {extras = ["python"], version = "^0.24"}
    mkdocs-material = "^9.4.11"

    [tool.poetry.scripts]
    sem = "modules.main:main"

    [build-system]
    requires = ["poetry-core"]
    build-backend = "poetry.core.masonry.api"

After some information on the project, dependencies are listed in groups (some are required by the project itself, some for development, others for documentation). By default poetry will install all groups, unless instructed otherwise.

Note how the main tool.poetry.dependencies group contains the python package: poetry will set up a virtual environment with a compatible version of python as the interpreter, where all the packages will be installed and the program will be run (to avoid conflicts with system packages). The tool.poetry.scripts section allows defining entrypoints (as module:function), which can be launched as

    $ poetry run <entrypoint>

Setting a ^ at the beginning of a version number means that the most recent available version will be installed, provided that it is at least as recent as the specified one and has the same major number (e.g., ^1.0 will install 1.2 if available, but not 2.0).
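The caret rule can be made concrete with a small, self-contained sketch. The functions below (caret_bounds and satisfies_caret are hypothetical names, not part of poetry) are a simplified illustration which only handles purely numeric versions with up to three components; poetry's actual resolver is considerably more general. The sketch also covers the case, described in the poetry documentation, of versions with leading zeros, where the upper bound is set by the first non-zero component.

```python
def parse(version: str) -> tuple:
    """Turn '1.2' into (1, 2); only numeric components are supported."""
    return tuple(int(part) for part in version.split("."))


def pad(parts: tuple) -> tuple:
    """Pad a version tuple with zeros to exactly three components."""
    return (parts + (0, 0, 0))[:3]


def caret_bounds(constraint: str) -> tuple:
    """Return the (inclusive lower, exclusive upper) bounds of '^X.Y.Z'."""
    parts = parse(constraint.lstrip("^"))
    # The leftmost non-zero component is the one that must not change;
    # if every component is zero, the last one given plays that role.
    pivot = next(
        (i for i, part in enumerate(parts) if part != 0), len(parts) - 1
    )
    upper = parts[:pivot] + (parts[pivot] + 1,)
    return pad(parts), pad(upper)


def satisfies_caret(version: str, constraint: str) -> bool:
    """Check whether a version falls within a caret constraint."""
    lower, upper = caret_bounds(constraint)
    return lower <= pad(parse(version)) < upper


print(satisfies_caret("1.2", "^1.0"))          # True
print(satisfies_caret("2.0", "^1.0"))          # False
print(satisfies_caret("0.104.5", "^0.104.1"))  # True
print(satisfies_caret("0.105.0", "^0.104.1"))  # False
```

With these bounds, the fastapi constraint in the file above accepts patch releases of the 0.104 series, but not 0.105.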

When the repository is cloned, one should run (as mentioned in the README) the command

    $ poetry install

This will install the most recent versions of the specified packages which do not conflict with each other's requirements, respecting the constraints in pyproject.toml, and set up the poetry entrypoints.
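To give an intuition of what an entrypoint such as sem = "modules.main:main" refers to, the sketch below resolves a module:function string with the standard importlib module. This is only a simplified picture (load_entrypoint is a hypothetical helper, and the script that poetry actually installs may work differently); standard-library targets are used so that the example runs without the sem repository.

```python
import importlib


def load_entrypoint(spec: str):
    """Resolve a 'module:function' string to the function it names."""
    module_name, _, function_name = spec.partition(":")
    module = importlib.import_module(module_name)
    return getattr(module, function_name)


# Standard-library stand-in for "modules.main:main"
basename = load_entrypoint("os.path:basename")
print(basename("/repo/modules/main.py"))  # main.py
```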

When poetry install runs for the first time (and every time the environment is changed, due to new package versions) poetry generates a poetry.lock file, which stores the actual installed versions of the packages. This is usually included in the repository (like in sem) and allows a much faster setup process, since poetry will use it on subsequent poetry install calls.

Once the poetry setup is complete, the server is run by launching it in the environment created by poetry:

    $ poetry run sem

If running in a folder different from the repository root directory, one should execute

    $ poetry --directory <repository root> run sem

We will later see how to set up automation tools (namely, a makefile) to simplify launching the server.

Once launched in this way, the server will run until manually stopped, listening for and responding to HTTP requests. Accessing, e.g., http://localhost:8000/query/ on the web browser will perform one such request, which will receive in response a list of all the expenses in the database.
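What the browser does here can also be reproduced from Python. In the sketch below, a tiny stand-in server from the standard library replaces sem (so that the example runs without a database), and the FAKE_EXPENSES payload is invented for illustration; only the /query/ path comes from the text above.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Invented payload, standing in for the expenses stored by sem
FAKE_EXPENSES = [{"id": 1, "amount": -12.0, "description": "test-1"}]


class StandInHandler(BaseHTTPRequestHandler):
    """Answer every GET request with the fake expense list as JSON."""

    def do_GET(self):
        body = json.dumps(FAKE_EXPENSES).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        """Silence the default per-request logging."""


def fetch_expenses(base_url: str):
    """Send a GET request to <base_url>/query/ and decode the JSON body."""
    with urlopen(f"{base_url}/query/") as response:
        return response.status, json.loads(response.read())


def demo():
    """Start the stand-in server on a free port, query it, shut it down."""
    server = HTTPServer(("127.0.0.1", 0), StandInHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        return fetch_expenses(f"http://127.0.0.1:{port}")
    finally:
        server.shutdown()
        server.server_close()


status, expenses = demo()
print(status, expenses)
```

Against a running sem instance, calling fetch_expenses("http://localhost:8000") should play the same role as opening the URL in the browser.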

Documentation

Our project will offer two documentation sets: one for the non-API modules (which uses the docstrings added in the various modules) and one for the API (which exploits the descriptions given in the FastAPI decorators). The separation between these two may look unintuitive, but in the end it is not too inconvenient, since documentation on the internals targets a different audience than the API one (server-focused and client-focused developers, respectively).

These documentation sets will be offered by documentation servers, services which a client (like a web browser) can connect to via HTTP requests, receiving in return the documentation to display.

Internals Documentation

sem uses mkdocs to generate documentation for the internals. This utility sources its configuration from the mkdocs.yml file in the project root directory, which in sem looks like

    # mkdocs.yml
    site_name: sem

    dev_addr: "0.0.0.0:8001"

    theme:
      name: "material"

    plugins:
      - mkdocstrings

    nav:
      - Homepage: index.md
      - Reference:
        - models: reference/models.md
        - schemas: reference/schemas.md
        - crud_handler: reference/crud_handler.md
        - session: reference/session.md

- dev_addr sets the address at which the documentation will be available. The default is 127.0.0.1 (localhost) on port 8000, which I changed to 8001 to be able to browse the documentation while using the server (which uses the same port).

- theme sets the graphical look of the documentation pages. Here we chose the mkdocs-material theme, which we specified as a dependency in the pyproject.toml file.

- mkdocstrings is a mkdocs plugin which automatically generates documentation pages from module, class and function docstrings.

- nav is an organized list of documentation pages (written in Markdown). The structure of this list (including the directories in which pages are organized) will mirror the organization of the documentation website. Here, we set a main page and one reference page per module in the reference/ directory (the latter will be generated from docstrings).

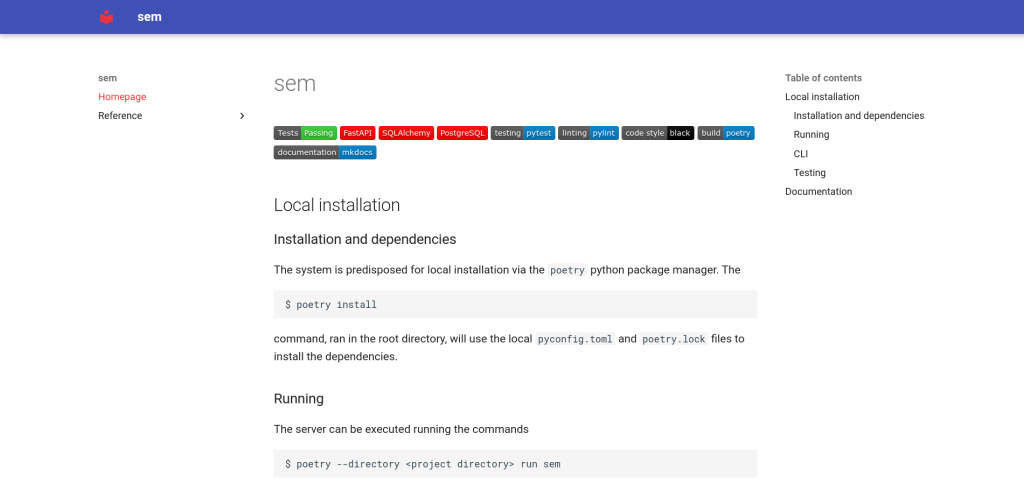

The main page usually contains general information about the project, and possibly links to the other sections of the documentation. In our case, it is simply a copy of the README file, stored in docs/index.md. The resulting page should be something like this:

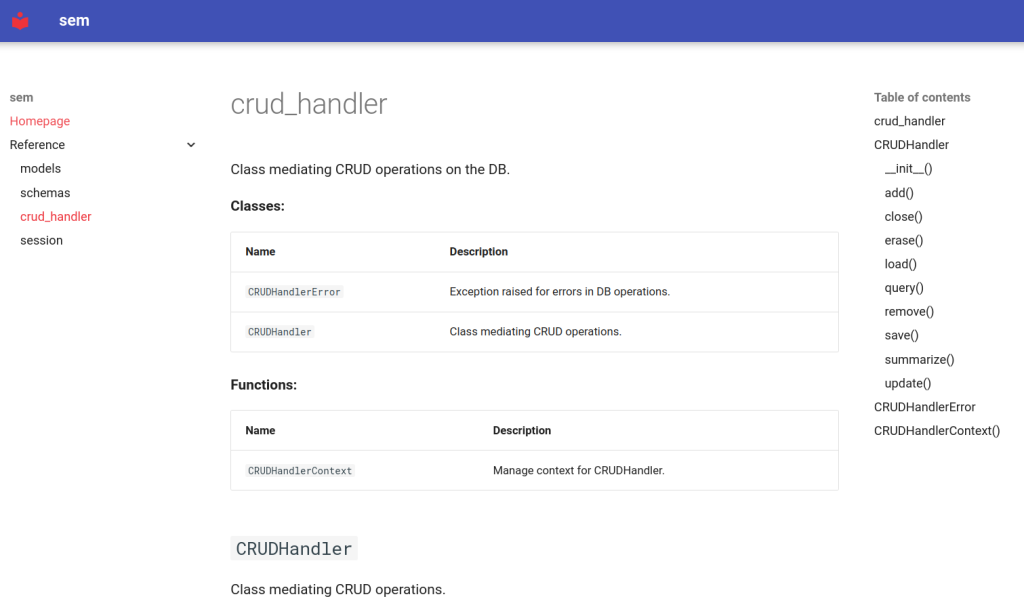

The menu on the left-side gives access to the main page and to the module pages, where functions and classes display their descriptions. These are generated from module documentation files, in the format (here, for the crud_handler.py module)

    # docs/reference/crud_handler.md
    ::: modules.crud_handler
        options:
          docstring_style: numpy

which instructs the mkdocstrings plugin to use the docstrings in the specified module (I used the numpy style for docstrings, which is not the default). The resulting documentation pages should look something like
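For reference, a numpy-style docstring of the kind that mkdocstrings can render looks as follows. The function itself is a hypothetical example, not taken from sem:

```python
def total_amount(amounts: list) -> float:
    """Compute the total of a list of expense amounts.

    Parameters
    ----------
    amounts : list of float
        Amounts of the individual expenses.

    Returns
    -------
    float
        Sum of all amounts (0.0 for an empty list).
    """
    return float(sum(amounts))


print(total_amount([-12.0, -13.0]))  # -25.0
```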

Once this setup is completed, the documentation is built and served using the commands

    $ poetry run mkdocs build
    $ poetry run mkdocs serve

and can be accessed via a web browser at the URL http://localhost:8001.

API documentation

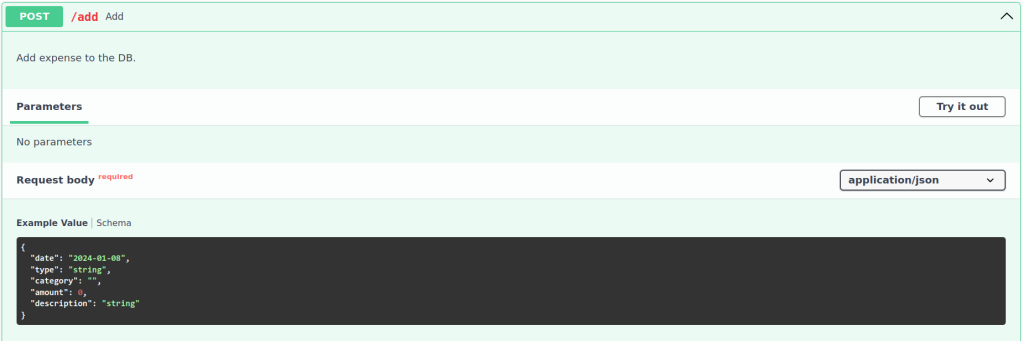

The API documentation service, built into FastAPI, uses docstrings only for the pydantic models present in the path functions (hence the absence of docstrings from modules/api.py). Instead, it collects information on pydantic models and API functions via the descriptions in Field() specifiers and FastAPI decorators.

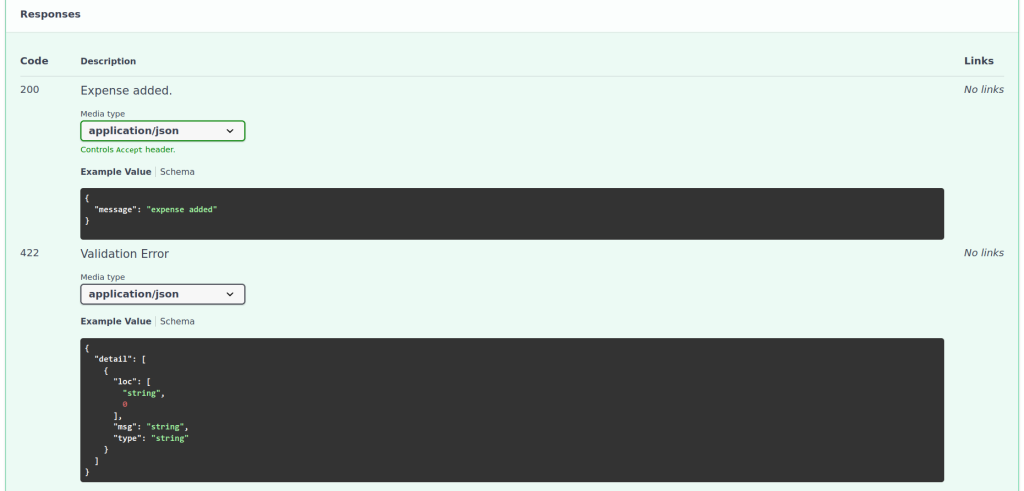

This documentation is accessible when the server is running at <URL>/docs or <URL>/redoc, where <URL> is the URL of the server (here, http://localhost:8000). The exposed page displays a list of the endpoints grouped by method and URL (below is an example screenshot of the /docs/ documentation for the /add endpoint).

The documentation will display the allowed parameters, examples of the response types, and other information, such as here for the /add endpoint.

Testing

General Concepts and Instruments

When writing applications, it is essential to write some form of testing, which ensures in an automatic way (or semi-automatic, at least) that the code does what is expected, covering a large enough number of situations and edge cases.

Different protocols for testing a program exist: here, we will mostly perform unit testing (which tests the functionality of individual parts of the program, like functions) and integration testing (which focuses on testing the interaction of program components).

We will use the pytest module to perform automatic testing, since its utilities strongly simplify test management. This framework looks for Python files whose name begins with test_; these should contain functions whose name also begins with test_, each of which is executed and performs some check on program functionality. pytest will report detailed information on the outcome of these checks, making it possible to diagnose problems if they arise.
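As a minimal illustration of these naming conventions, a file such as the following (test_amounts.py and the functions in it are hypothetical, not part of sem) would be collected and run by pytest:

```python
# test_amounts.py (hypothetical file name, matching the test_ prefix)


def net_balance(amounts):
    """Function under test: sum a list of signed amounts."""
    return sum(amounts)


def test_net_balance():
    """Collected by pytest because the name starts with test_."""
    assert net_balance([10.0, -2.5]) == 7.5


def test_net_balance_empty():
    """An edge case: no amounts at all."""
    assert net_balance([]) == 0
```

In a real project the function under test would be imported from the codebase rather than defined in the test file; it is kept inline here only to make the example self-contained.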

The first component of the test suite is the tests/common.py file, which contains general utilities employed by all the tests. TEST_DB_NAME will be the name of a separate database used to store test data (to avoid polluting the main program database), while expenses is a tuple of ExpenseRead objects which will be used to fill the test database with example data.

    # tests/common.py
    from contextlib import contextmanager

    from modules.schemas import ExpenseAdd
    from modules.schemas import ExpenseRead
    from modules.crud_handler import CRUDHandler


    TEST_DB_NAME = "sem-test"


    # Example expenses for testing
    expenses = (
        ExpenseRead(
            id=1,
            date="2023-12-31",
            type="R",
            category="gen",
            amount=-12.0,
            description="test-1",
        ),
        ExpenseRead(
            id=2,
            date="2023-12-15",
            type="C",
            category="test",
            amount=-13.0,
            description="test-2",
        ),
        ...
    )

In order to perform realistic testing of the service layer, it is useful to fill the test database with some example data, which will be queried, updated, and so on. To simplify testing, it makes sense to erase the test database before the test is executed and after it is completed, to have a controlled testing environment. This could be done manually, but it is much cleaner to define a testing context manager to automate cleanup.

    # tests/common.py
    @contextmanager
    def CRUDHandlerTestContext() -> CRUDHandler:
        """Manage testing context for CRUDHandler.

        Inits and inserts example data, removing all data
        from table at closure.

        Yields
        -----------------------
        CRUDHandler
            The context-managed and populated CRUDHandler.
        """
        # Easier to define a new context manager,
        # should call erase() in __enter__() and __exit__().
        ch = CRUDHandler(TEST_DB_NAME)
        ch.erase()

        for exp in expenses:
            # ID field ignored by pydantic constructor
            ch.add(ExpenseAdd(**exp.model_dump()))

        try:
            yield ch
        finally:
            ch.erase()
            ch.close()

This is very similar to CRUDHandlerContext(), with the difference that 1) data is inserted into a freshly emptied test database before yielding, and 2) the database is erased before closing the context.
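The same erase, populate, yield, erase pattern can be tried out in isolation. The sketch below uses the standard sqlite3 module instead of CRUDHandler (an assumption made to keep the example self-contained; the table layout, the example rows and the populated_db name are invented for illustration):

```python
import sqlite3
from contextlib import contextmanager

# Invented example rows: (date, amount)
EXAMPLE_ROWS = (("2023-12-31", -12.0), ("2023-12-15", -13.0))


@contextmanager
def populated_db(path: str = ":memory:"):
    """Yield a connection to a database holding only the example rows."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS expenses (date TEXT, amount REAL)"
    )
    # Start from a clean table, then insert the example data
    conn.execute("DELETE FROM expenses")
    conn.executemany("INSERT INTO expenses VALUES (?, ?)", EXAMPLE_ROWS)
    conn.commit()

    try:
        yield conn
    finally:
        # Cleanup runs even if the body of the with block raises
        conn.execute("DELETE FROM expenses")
        conn.commit()
        conn.close()


with populated_db() as conn:
    count = conn.execute("SELECT COUNT(*) FROM expenses").fetchone()[0]
    print(count)  # 2
```

Placing the cleanup in a finally clause is what guarantees a controlled environment for the next test, even when an assertion fails inside the with block.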

In theory, this could be replaced by mocking the database, i.e., replacing database access with a function yielding expense data, bypassing the database. This would allow a stricter unit testing of the service layer, since no interaction with the data layer would take place. However, I deemed the testing functions below, which are essentially integration tests of data and service layer, good enough to diagnose problems in the individual service layer as well.
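For comparison, a minimal sketch of the mocking approach with the standard unittest.mock module could look as follows. total_amount is a hypothetical service-layer function, and the fake handler only mimics a query() method returning plain dictionaries rather than sem's actual objects:

```python
from unittest.mock import Mock


def total_amount(handler) -> float:
    """Hypothetical service-layer function relying on the data layer."""
    return sum(expense["amount"] for expense in handler.query())


def test_total_amount_with_mock():
    """Unit test: the database is replaced by a mock object."""
    fake_handler = Mock()
    fake_handler.query.return_value = [
        {"amount": -12.0},
        {"amount": -13.0},
    ]

    assert total_amount(fake_handler) == -25.0
    # The data layer was consulted exactly once, with no arguments
    fake_handler.query.assert_called_once_with()
```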

CRUDHandler Tests

Our first tests will concern the CRUDHandler class, checking that its methods perform as intended in several cases. These are performed in the tests/test_crud_handler.py file: we will not list the entirety of the file here, but only display a few tests where useful tools are presented.

The first test shown here checks the query() method by performing a filter-less search (for brevity, we omit the subsequent, similar tests, which also apply filters and check that only matching expenses are returned).

    # tests/test_crud_handler.py
    from fastapi.encoders import jsonable_encoder

    from pytest import raises
    ...
    from modules.schemas import QueryParameters
    from modules.crud_handler import CRUDHandlerError

    from tests.common import expenses
    ...
    from tests.common import CRUDHandlerTestContext


    def test_global_query():
        """Tests no-parameter query."""
        with CRUDHandlerTestContext() as ch:
            # retrieve all expenses
            res = ch.query(QueryParameters())
            expected = [
                expenses[4],
                expenses[3],
                expenses[2],
                expenses[1],
                expenses[0],
            ]

            assert jsonable_encoder(res) == jsonable_encoder(expected)
    ...

The test function is run within the CRUDHandlerTestContext() context, and should return the inserted data. Direct output from the query is then compared to a list of the inserted entries, sorted by date (to match the output of the query() method).

As in all pytest test functions, an assert statement compares the expected output with the actual one. jsonable_encoder() serializes the compared expressions, and is used here to avoid precision issues when comparing for equality the floating-point fields of the two sides.

A more complex test, displayed below, is the one for the remove() function. Two existing expenses are removed and the database is queried, to check that they disappeared and the others were left untouched; then, the deletion of a non-existent expense is attempted.

    def test_remove():
        """Tests removal function."""
        with CRUDHandlerTestContext() as ch:
            # Selective removal
            ch.remove([3, 1])

            res = ch.query(QueryParameters())
            expected = [
                expenses[4],
                expenses[3],
                expenses[1],
            ]

            assert jsonable_encoder(res) == jsonable_encoder(expected)

            # Nonexistent ID
            with raises(CRUDHandlerError) as err:
                res = ch.remove([19])
            assert str(err.value) == "ID 19 not found"

            # Checking that no changes are committed in case of error
            res = ch.query(QueryParameters())
            assert jsonable_encoder(res) == jsonable_encoder(expected)
    ...

In the second part of the test, the attempted removal of a non-existent expense (ID 19) should result in a CRUDHandlerError. The pytest.raises() function creates a context in which exception-raising instructions can be enclosed without making the program crash (indeed, an exception of that type becomes required to make the test succeed). I then compare the message of the error with the expected one (by accessing the value attribute of the object returned by the context).
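The behaviour of pytest.raises() can be checked on a self-contained example (MissingIdError and remove_ids are hypothetical stand-ins for CRUDHandlerError and the remove() method). Note that the assertion on the message is placed after the with block: statements following the raising call inside the block would never run.

```python
import pytest


class MissingIdError(Exception):
    """Hypothetical stand-in for CRUDHandlerError."""


def remove_ids(stored: list, ids: list) -> list:
    """Return stored IDs without the given ones; fail on unknown IDs."""
    for id_ in ids:
        if id_ not in stored:
            raise MissingIdError(f"ID {id_} not found")
    return [id_ for id_ in stored if id_ not in ids]


def test_remove_missing_id():
    """The test passes only if MissingIdError is raised."""
    with pytest.raises(MissingIdError) as err:
        remove_ids([1, 2, 3], [19])

    # err.value is the exception instance caught by the context
    assert str(err.value) == "ID 19 not found"
```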

API Tests

The tests/test_api.py file contains tests to be performed through the API. These will in turn involve the service and data layer, which are tested independently above. Due to the more involved process of testing through the API, some preliminary declarations are required.

    from fastapi.testclient import TestClient
    from fastapi.encoders import jsonable_encoder

    import pytest

    from modules.schemas import ExpenseRead
    from modules.crud_handler import CRUDHandler
    from modules.crud_handler import CRUDHandlerContext
    from modules.api import app
    from modules.api import get_ch

    from tests.common import TEST_DB_NAME
    ...
    from tests.common import expenses
    from tests.common import CRUDHandlerTestContext


    def get_test_ch() -> CRUDHandler:
        """Yield CRUDHandler object for testing purposes.

        Pre-populated with some expenses, linked to "sem-test" DB.

        Yields
        -----------------------
        CRUDHandler
            The CRUDHandler object.
        """
        with CRUDHandlerContext(TEST_DB_NAME) as ch:
            yield ch

get_test_ch() is a function which acts like get_ch() in the API module, yielding a CRUDHandler object connected to the testing database. Its use will become apparent from the next function:

    @pytest.fixture
    def test_client():
        """Construct FastAPI test client."""
        app.dependency_overrides[get_ch] = get_test_ch
        return TestClient(app)

There is a lot to unpack here.

- Fixtures, declared via the @pytest.fixture decorator, are functions returning objects which can be used in test functions, where they are passed as arguments. Contexts (like CRUDHandlerTestContext() above) may still be preferable if some cleanup action has to be performed, however.

- app.dependency_overrides is a dictionary attribute of FastAPI objects. Its keys are the current dependencies of the object, while its values are the overriding dependencies which will replace them. By default, the dictionary is empty, and no overrides are performed; here, we are instructing FastAPI to replace get_ch() with get_test_ch() when the former is called in the endpoints. Then, the API will access the test database instead of the main one.

- TestClient is a FastAPI object which acts as an HTTP client, allowing us to send requests to the server and receive responses. This object will be employed in the testing functions below, as a result of passing the fixture as a parameter.
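The override mechanism can be pictured with a simplified, FastAPI-free model. The sketch below is not how FastAPI is implemented; resolve, get_db_name and get_test_db_name are hypothetical names (as is the "sem" production database name), used only to show how a dictionary lookup lets a test swap one provider for another without touching the endpoint code:

```python
from typing import Callable, Dict

# Maps an original dependency to its replacement (empty by default)
dependency_overrides: Dict[Callable, Callable] = {}


def resolve(dependency: Callable) -> Callable:
    """Return the override for a dependency, or the dependency itself."""
    return dependency_overrides.get(dependency, dependency)


def get_db_name() -> str:
    """Provider used in production (database name is an assumption)."""
    return "sem"


def get_test_db_name() -> str:
    """Provider used during tests."""
    return "sem-test"


def endpoint() -> dict:
    """Endpoint code always asks for get_db_name, never for the override."""
    provider = resolve(get_db_name)
    return {"db": provider()}


print(endpoint())  # {'db': 'sem'}
dependency_overrides[get_db_name] = get_test_db_name
print(endpoint())  # {'db': 'sem-test'}
dependency_overrides.clear()
```

Clearing the dictionary at the end restores the original behaviour, which is worth doing whenever overrides should not leak from one test to another.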

Our first example of API testing will be the test of the add() API, where a new expense will be added to the test database, which will then be queried to verify that the procedure went smoothly.

    def test_add_api(test_client):
        """Tests adding function."""
        with CRUDHandlerTestContext():
            # Skipping category
            new_exp = {
                "date": "2023-12-12",
                "type": "M",
                "amount": -1.44,
                "description": "added via API",
            }
            response = test_client.post("/add", json=new_exp)

            assert response.status_code == 200
            assert response.json() == {"message": "expense added"}

            response = test_client.get("/query?types=M")

            expected = [
                expenses[2],
                ExpenseRead(id=6, **new_exp),
            ]

            assert response.status_code == 200
            assert response.json() == jsonable_encoder(expected)

Here, a new expense is created (in JSON format, since the request will require a JSON body) and passed to the post() method of the TestClient (the called HTTP method should match that of the endpoint). The path is given as positional argument, while the body is passed via the json argument.

The status code and body of the response (via its status_code attribute and json() method) are checked against the expected values. The TestClient.get() method is then called on the /query URL (note the passed query parameters) and the response is checked against the expected value.
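Query strings like the one used in this request do not need to be written by hand; the standard urllib.parse.urlencode function can build them, including repeated parameters. The build_url helper is a hypothetical convenience, not part of sem or FastAPI:

```python
from urllib.parse import urlencode


def build_url(path: str, **params) -> str:
    """Append query parameters to a path; lists become repeated keys."""
    if not params:
        return path
    return f"{path}?{urlencode(params, doseq=True)}"


print(build_url("/query", types="M"))    # /query?types=M
print(build_url("/remove", ids=[3, 1]))  # /remove?ids=3&ids=1
```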

Our final test example will be the one for the removal API: here, we need to manage the case in which errors are returned.

    def test_remove_api(test_client):
        """Tests removing request."""
        with CRUDHandlerTestContext():
            # Selective removal
            response = test_client.delete("/remove?ids=3&ids=1")

            assert response.status_code == 200
            assert response.json() == {"message": "expense(s) removed"}

            response = test_client.get("/query")

            expected = [
                expenses[4],
                expenses[3],
                expenses[1],
            ]

            assert response.json() == jsonable_encoder(expected)

            # Inexistent ID
            response = test_client.delete("/remove/?ids=19")
            assert response.status_code == 404
            assert response.json() == {"detail": "ID 19 not found"}
    ...

HTTPExceptions raised by the endpoints never reach the test code as Python exceptions: FastAPI converts them into HTTP error responses. As a result, pytest.raises() is not required, and the request still returns a response, whose status code and body are checked against the expected values.

The test suite described above is run by executing

    $ poetry run python3 -m pytest -x -s -v .

The options are not strictly necessary, but are in my opinion helpful:

- -v adds a useful level of verbosity;

- -x stops execution at the first failed test;

- -s prints to screen the otherwise hidden program output.

pytest will detail which tests failed and which succeeded, function by function, and offer additional information in case of failure.

What’s Next

In this post, we understood how to execute a server program, manage its dependencies, offer and browse documentation and perform testing.

In the next installment in this series, we will learn how to quickly deploy our server via docker containers, and how to write a simple yet effective command-line interface to access it.

Author: Adriano Angelone

After obtaining his master's degree in Physics at the University of Pisa in 2013, he received his Ph.D. in Physics at Strasbourg University in 2017. He worked as a post-doctoral researcher at Strasbourg University, SISSA (Trieste) and Sorbonne University (Paris), before joining eXact-lab as Scientific Software Developer in 2023.

At eXact-lab, he works on the optimization of computational codes, and on the development of data engineering software.